1. Introduction to Generative AI

What is Generative AI?

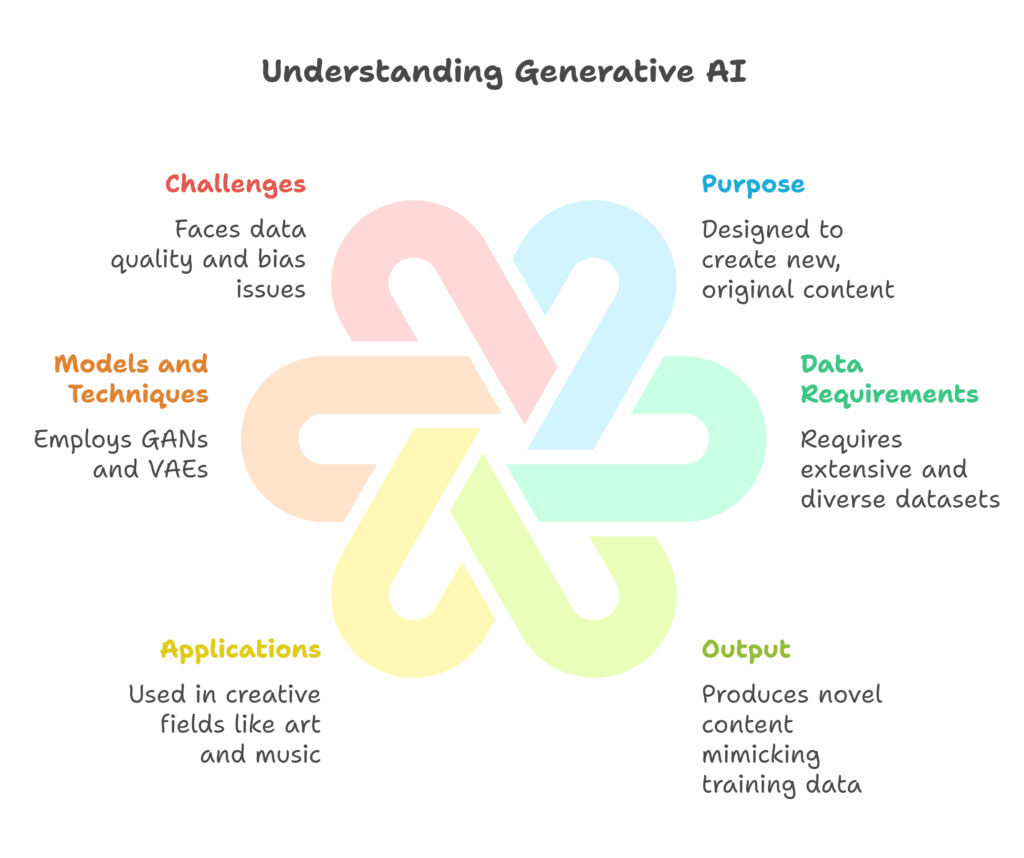

Generative AI refers to artificial intelligence systems designed to create original content—such as text, images, audio, video, or code—by learning patterns from existing data. Unlike traditional AI, which focuses on analyzing data to make predictions or classifications, generative models produce new outputs that mimic human creativity.

Key Characteristics:

- Creativity: Generates novel content (e.g., ChatGPT writing essays, DALL-E creating art).

- Adaptability: Fine-tuned for diverse tasks, from coding to medical research.

- Scalability: Produces vast amounts of content rapidly.

Generative AI Meaning:

- Core Function: Simulate human-like creativity by learning from datasets (e.g., books, images, code).

- Main Goal: Solve problems requiring innovation, such as designing molecules or composing music.

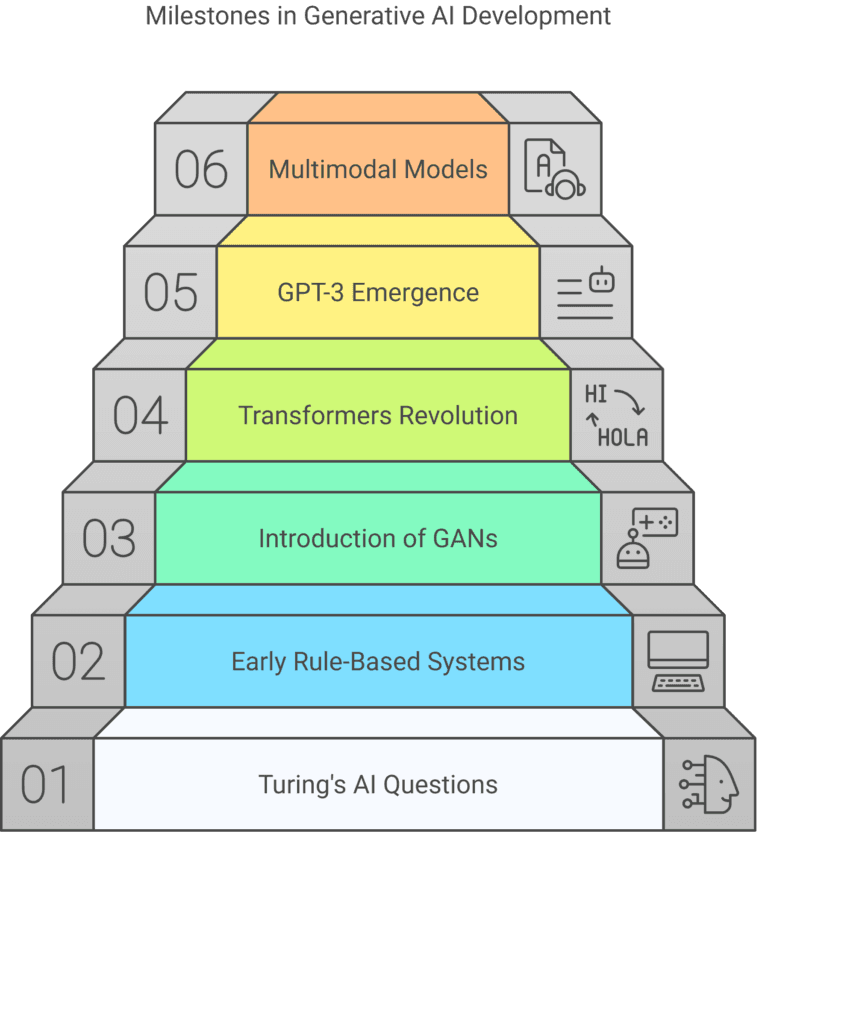

History of Generative AI

Generative AI has evolved through key milestones:

- 1950s: Alan Turing’s foundational work on machine intelligence raised questions about AI creativity.

- 1960s–1980s: Early rule-based systems like ELIZA (1966) simulated conversations but lacked true generative capability.

- 2014: Generative Adversarial Networks (GANs) were introduced by Ian Goodfellow and colleagues, enabling realistic image generation.

- 2017: Transformers (Vaswani et al.) revolutionized natural language processing (NLP), leading to models like GPT.

- 2020: GPT-3 (OpenAI) demonstrated fluent, human-like text generation at scale, paving the way for mainstream adoption.

- 2023: Multimodal models like Google Gemini combined text, image, and audio processing.

2. How Generative AI Works

Mechanics of Generative AI

How does generative AI work?

- Training Data: Models learn from vast datasets (e.g., Wikipedia, LAION-5B images).

- Architectures:

  - Transformers: Used for text (e.g., ChatGPT). They process sequences with self-attention, which lets every token weigh every other token, and are trained to predict the next token.

  - Diffusion Models: Generate images by iteratively refining noise into coherent outputs (e.g., Stable Diffusion).

  - GANs: Pit two neural networks (generator vs. discriminator) against each other to create realistic data.

- Inference: Users provide prompts (e.g., “Write a poem about the ocean”), and the model generates outputs.
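To make the self-attention step mentioned above more concrete, here is a minimal sketch in plain Python. It assumes a single attention head and uses the input vectors directly as queries, keys, and values; real Transformers apply learned projection matrices first, and models like GPT also mask out future positions. The function names and the toy embeddings are illustrative, not taken from any library.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Single-head self-attention with queries = keys = values = the inputs.

    Learned projection matrices and causal masking are omitted
    (an assumption made to keep the sketch short).
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score the query against every position, scaled by sqrt(dim).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # Each output is a weighted average of all value vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors))
                 for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional embeddings for a three-token sequence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
```

Each row of `weights` shows how strongly one token attends to the others. Here the first token gives equal weight to itself and the third token, and less to the second, because its dot product with the second token is zero.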

Example Workflow:

- Input: “A futuristic cityscape at sunset.”

- Processing: The model applies patterns encoded in its learned parameters (it does not look up its training data at this stage) to predict pixel patterns or the next words of a description.

- Output: A high-resolution image or detailed paragraph.
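The input, processing, output loop above can be sketched with a deliberately tiny stand-in model. The example below assumes a bigram word counter in place of a neural network, and a three-sentence corpus invented for illustration. Only the shape of the loop (learn statistics once, then extend a prompt one token at a time) carries over to real systems.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count which word follows which in the training text."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def generate(follows, prompt, max_new_words=5):
    """Extend the prompt one word at a time, taking the most frequent follower."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the model never saw anything follow this word
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

# Hypothetical training corpus.
corpus = [
    "the city glows at sunset",
    "the city glows at night",
    "the harbor sleeps at sunset",
]
model = train_bigrams(corpus)
print(generate(model, "the city"))  # → the city glows at sunset
```

Once `train_bigrams` has run, generation uses only the learned counts, not the corpus itself. Production models usually sample from the predicted distribution instead of always taking the top choice, which is why the same prompt can give different outputs.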

Pre-trained Multi-Task Generative AI Models:

- Definition: Large models trained on diverse data (text, code, images) and adapted for specific tasks.

- Examples:

  - GPT-4: Generates text, translates languages, and writes code.

  - PaLM (Google): Handles reasoning, coding, and creative writing.

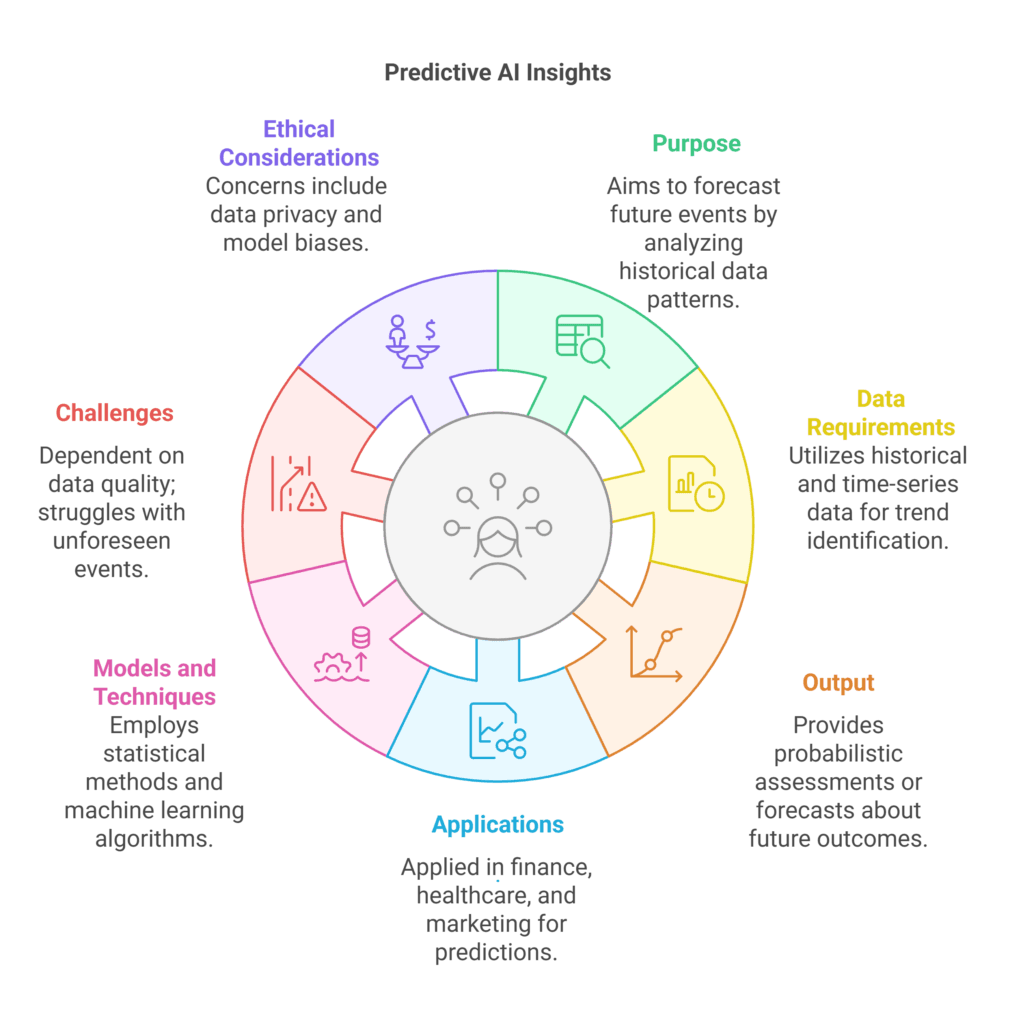

3. Generative AI vs. Predictive AI: Key Differences

Example:

- Generative: DALL-E creates a logo for a startup.

- Predictive: A bank uses AI to predict loan default risks.
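The contrast can be shown in a few lines of code. The sketch below uses invented loan data and the simplest possible models: a threshold classifier for the predictive case and a fitted Gaussian for the generative case. Image generators like DALL-E are vastly more complex, but the difference in what each model outputs is the same: a label for an existing input versus new data points.

```python
import random
import statistics

# Hypothetical toy data: (debt-to-income ratio, defaulted?) per past loan.
loans = [(0.10, False), (0.15, False), (0.22, False), (0.35, False),
         (0.48, True), (0.55, True), (0.61, True), (0.70, True)]

def fit_threshold(data):
    """Predictive: learn a cut-off midway between the two class means."""
    repaid = [ratio for ratio, did_default in data if not did_default]
    failed = [ratio for ratio, did_default in data if did_default]
    return (statistics.mean(repaid) + statistics.mean(failed)) / 2

def predict_default(threshold, ratio):
    """Predictive output: a label for one existing input."""
    return ratio > threshold

def fit_gaussian(data):
    """Generative: learn the distribution of the ratios themselves."""
    ratios = [ratio for ratio, _ in data]
    return statistics.mean(ratios), statistics.stdev(ratios)

def sample_ratios(mean, stdev, n, seed=0):
    """Generative output: new data points drawn from the learned distribution."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    return [rng.gauss(mean, stdev) for _ in range(n)]

threshold = fit_threshold(loans)
print(predict_default(threshold, 0.50))      # classify a new applicant
mean, stdev = fit_gaussian(loans)
print(sample_ratios(mean, stdev, 3))         # synthesize three new ratios
```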

4. Key Applications of Generative AI

Industry-Specific Use Cases

- Creative Arts:

  - Adobe Generative AI: Tools like Firefly generate logos, videos, and marketing content.

  - Music: Platforms like AIVA compose symphonies; OpenAI Jukebox creates songs in specific genres.

- Healthcare:

  - Drug Discovery: Insilico Medicine reported using generative AI to design candidate inhibitors for a fibrosis-related target (DDR1) in 21 days.

  - Protein Structure Prediction: Models like AlphaFold predict protein structures to accelerate research.

- Software Development:

  - Code Generation: GitHub Copilot (powered by OpenAI Codex) writes code snippets.

  - Debugging: AI assistants flag likely bugs and suggest fixes as developers write code.

- Security:

  - Threat Detection: AI generates synthetic data to train fraud detection systems.

  - Risks: Malicious actors use tools like WormGPT to craft phishing emails.

- Education:

  - Personalized Learning: AI tutors like Khan Academy’s Khanmigo generate custom quizzes.

What Would Be an Appropriate Task for Using Generative AI?

- Marketing: Auto-generate ad copy or social media posts.

- Design: Create product prototypes via text prompts.

- Entertainment: Scriptwriting for films or video games.

What is One Thing Current Generative AI Applications Cannot Do?

- Human Nuance: Understand sarcasm, cultural context, or emotional subtleties reliably.

5. Foundation Models in Generative AI

What Are Foundation Models?

Foundation models are large-scale AI systems pre-trained on broad datasets and adapted for specific tasks. They form the “foundation” for specialized applications.

Examples:

- GPT-4: Text generation, translation, and summarization.

- Stable Diffusion: Image generation from text prompts.

- CLIP (OpenAI): Links text and images for cross-modal understanding.

Challenges:

- Data Bias: Models may replicate biases in training data (e.g., gender stereotypes).

- Compute Costs: Training GPT-4 reportedly cost more than $100 million in compute.

Pre-trained Multi-Task Models:

- Definition: Models like T5 (Google) cast translation, summarization, and Q&A as text-to-text problems, so one model handles them all without task-specific architectures.
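One way to picture how a single model covers several tasks is to look at how the inputs are phrased. The sketch below renders three tasks as plain-text inputs with a task prefix, in the style described in the T5 paper. The exact template strings and the `build_input` helper are assumptions made for illustration, and no model is actually called.

```python
# Templates in the style of T5's task prefixes. The exact strings and the
# helper name are assumptions made for this sketch.
TEMPLATES = {
    "translate": "translate English to German: {text}",
    "summarize": "summarize: {text}",
    "qa": "question: {question} context: {context}",
}

def build_input(task, **fields):
    """Render one task instance as a plain-text input for a single model."""
    if task not in TEMPLATES:
        raise ValueError(f"unknown task: {task}")
    return TEMPLATES[task].format(**fields)

examples = [
    build_input("translate", text="The house is small."),
    build_input("summarize", text="Generative AI creates new content."),
    build_input("qa", question="Who introduced GANs?",
                context="GANs were introduced in 2014."),
]
for line in examples:
    print(line)
```

Because every task arrives as text and every answer leaves as text, the same weights and the same decoding loop can serve all three.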

6. Ethical Considerations and Challenges

Key Ethical Issues

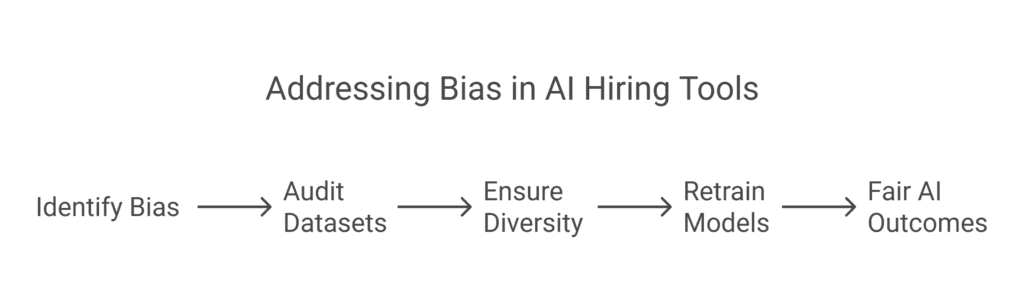

- Bias and Fairness:

  - Example: An experimental AI recruiting tool at Amazon penalized résumés from women because it was trained on historically male-dominated hiring data.

  - Solution: Audit datasets for diversity and retrain models.

- Misinformation:

  - Deepfakes: AI-generated videos of politicians making false statements.

  - Countermeasures: Tools like Microsoft Video Authenticator detect synthetic media.

- Intellectual Property:

  - Ownership: Who owns AI-generated art? Courts and copyright offices are still working out how existing copyright law applies.

- Security:

  - Malware: Hackers use AI to write polymorphic viruses.

Why is Controlling the Output of Generative AI Systems Important?

- To prevent harmful content (e.g., hate speech, fake news) and ensure compliance with laws like the EU’s AI Act.

Best Practices:

- Human Oversight: Review AI outputs before deployment.

- Watermarking: Tag AI-generated content for transparency (e.g., Google SynthID).
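As a rough illustration of the human-oversight practice, the sketch below routes generated outputs either to an approved list or to a queue for human review. The `ReviewGate` class and the keyword list are hypothetical. A real deployment would rely on a trained moderation classifier and a policy for what is allowed to bypass review, not on a handful of phrases.

```python
from dataclasses import dataclass, field

# Hypothetical placeholder terms; a real system would use a trained
# moderation classifier rather than a keyword list.
FLAGGED_TERMS = {"guaranteed cure", "wire the money"}

@dataclass
class ReviewGate:
    approved: list = field(default_factory=list)
    needs_review: list = field(default_factory=list)

    def submit(self, text):
        """Route one generated output: approve it or hold it for a human."""
        lowered = text.lower()
        if any(term in lowered for term in FLAGGED_TERMS):
            self.needs_review.append(text)
            return "needs_review"
        self.approved.append(text)
        return "approved"

gate = ReviewGate()
print(gate.submit("Our new app helps you track daily steps."))
print(gate.submit("This supplement is a guaranteed cure for colds."))
```

The design choice here is that nothing flagged reaches publication without a person looking at it; stricter setups send every output through review and use the automated check only to prioritize the queue.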

7. The Future of Generative AI

Emerging Trends

- Multimodal Models: Systems like Google Gemini process text, images, and audio simultaneously.

- Small Language Models (SLMs): Efficient models (e.g., Microsoft Phi-3) for niche tasks.

- Regulation: The EU AI Act mandates transparency for generative AI systems.

Debates:

- Is Generative AI Overhyped? While transformative, it still makes confident factual errors (hallucinations) and can lose track of context.

- Can Generative AI Replace Humans? It augments creativity but lacks empathy and ethical judgment.

8. How to Learn Generative AI: Courses and Resources

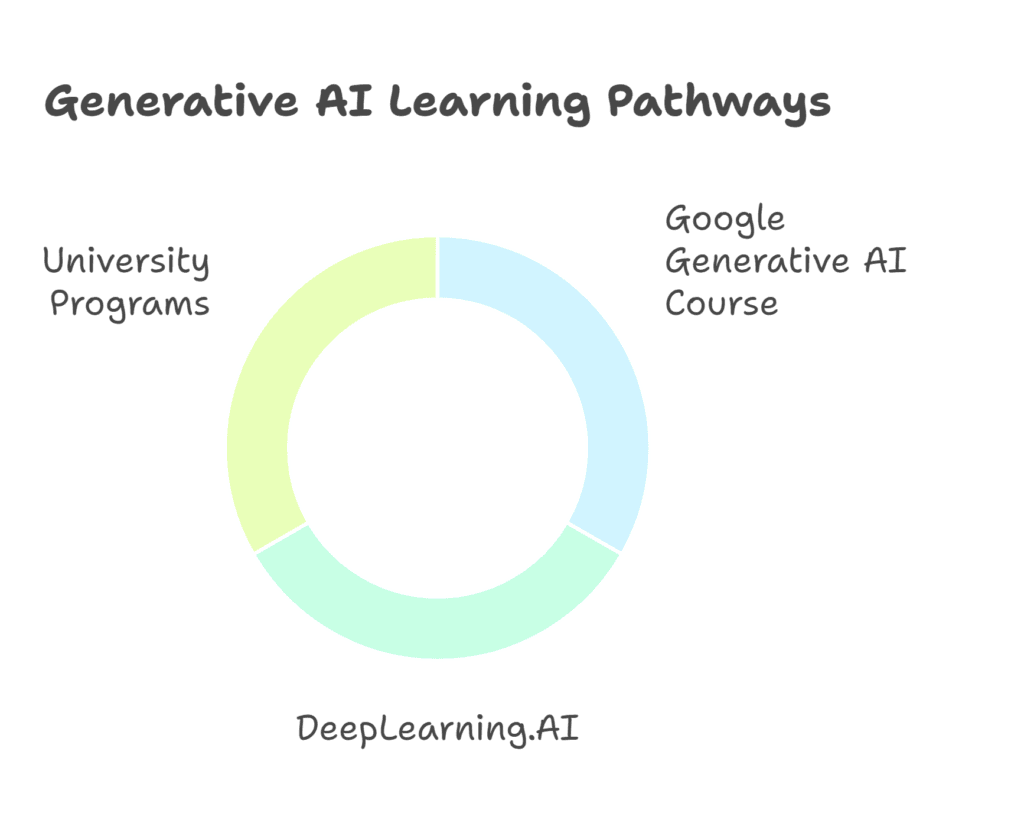

Generative AI Courses

- Google Generative AI Course: Free modules on Coursera cover basics to advanced topics.

- DeepLearning.AI: Offers specialized courses like ChatGPT Prompt Engineering for Developers.

- University Programs: Stanford’s AI Professional Program includes generative AI modules.

Where to Learn Generative AI:

- Platforms: Coursera, Udacity, edX.

- Certifications: AWS Certified Machine Learning, NVIDIA DLI.

9. FAQs About Generative AI

Q1: What Type of Data is Generative AI Most Suitable For?

A: Unstructured data (text, images, audio) rather than structured tables.

Q2: What is the Primary Goal of a Generative AI Model?

A: To produce original, human-like content (e.g., stories, art, code).

Q3: How Has Generative AI Affected Security?

A: It enables sophisticated cyberattacks but also improves threat detection.

Q4: Can I Generate Code Using Generative AI Models?

A: Yes—tools like Amazon CodeWhisperer automate coding tasks.

Q5: What Challenge Does Generative AI Face with Respect to Data?

A: Ensuring training data is diverse, unbiased, and legally sourced.

10. References and Further Reading

Books:

- The Age of AI by Henry Kissinger, Eric Schmidt, and Daniel Huttenlocher (discusses societal impacts).

- Generative Deep Learning by David Foster (technical guide).

Research:

- “Attention Is All You Need” (Vaswani et al., 2017): the paper that introduced the Transformer.
